Local Interpretable Model-agnostic Explanations

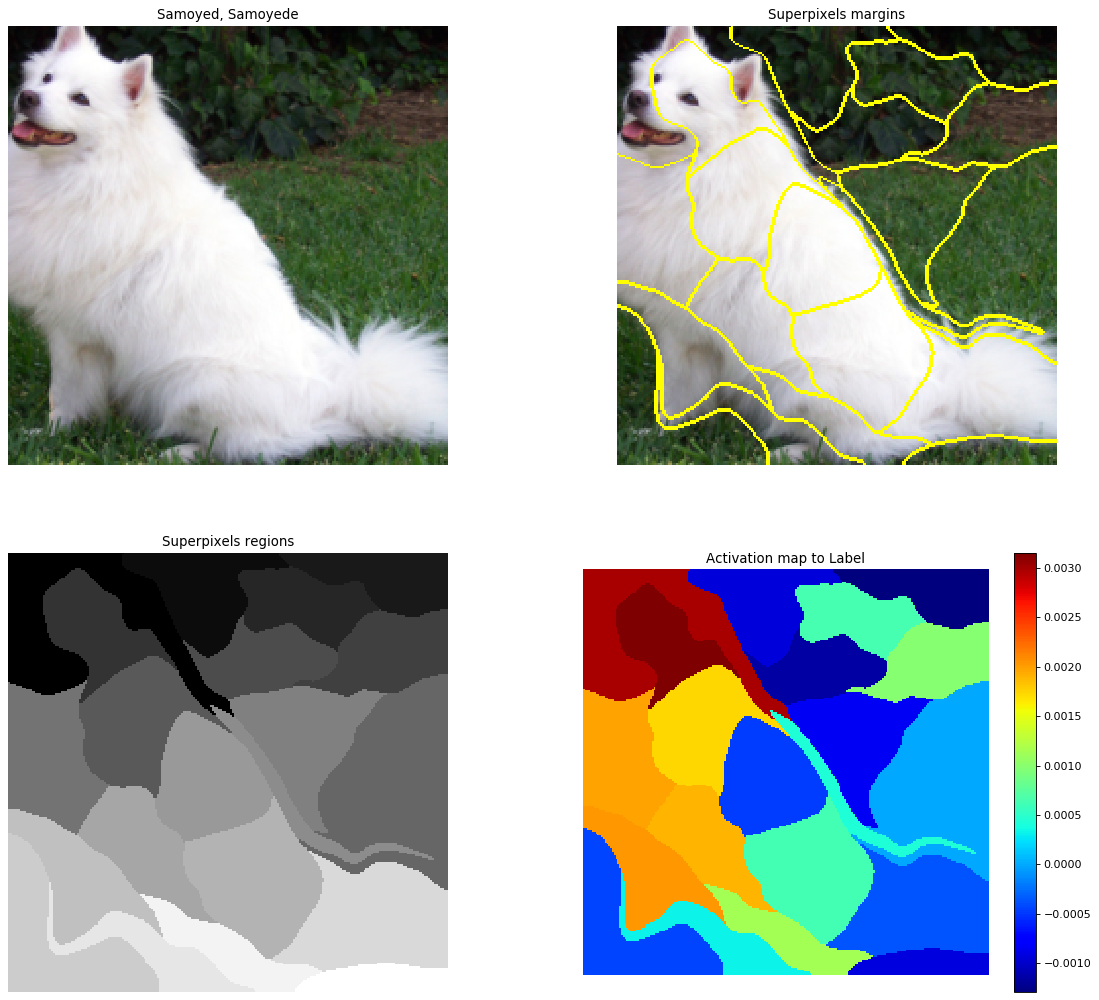

Given the original represntation of an instance being explained \(x\) (the image), we can generate a binary vector \(x'\) for its interpretable representation. In this case, \(x'\) is an one vector of the length of number of superpixels. A perturbed sample \(z'\) is a binary vector which is sampled from $x'$ randomly under the uniform distribution. Then a fake image \(z\) with less superpixels is generated.

To choose the best explanation, it must reduce the cost between the true model and approximate function, and the complexity penalty of approximate function.

$$\xi(x) = \arg\min_{g\in G}\underbrace{\mathcal{L}(f,g,\pi_x)}_{\text{cost}}+\underbrace{\Omega(g)}_{\text{complexity}}$$where

$$ \begin{align*} \mathcal{L}(f,g,\pi_x) &= \sum_{z,z'\in\mathcal{Z}}\pi_x(z)(f(z)-g(z'))^2 \\ g(z') &= w_g\cdot z'\\ \Omega(g) &= \infty\mathcal{1}[\|w_g\|_0\gt K] \\ \pi_x(z)&= e^{-\frac{1}{\sigma^2}\|x-z\|^2_2} \end{align*} $$where \(f(x)\) is the neural network model which predicts the probability of \(x\) belonging to a certain class, \(g\) an interpretable model, \(\pi_x(z)\) the proximity measure between an instance \(z\)(fake image) to \(x\)(real image), \(\mathcal{L}\) the fidelity function and \(\Omega\) the complexity measure (restricting the number of superpixels used to explain the label).

\(\mathcal{L}(f,g,\pi_x)\) measures how unfaithful \(g\) is approximating \(f\) in the locality defined by \(\pi_x\). If this term is small, it represents \(g\) faithfully approximates \(f\).

\(\Omega(g)\) is similar to a regularization term, it limits the complexity of the approximate model \(g\). If \(g\) is a random forest algorithm, it limits the depth by \(K\). If \(g\) is a linear model, it limits the number of features. Our aim is to approximate \(f(z)\) by \(g(z')\) as follows,

$$f(z)\approx g(z') = w_g\cdot z'$$Least absolute shrinkage and selection operator (lasso) is applied to compute the representation of \(w_g\). The lasso estimate (\(\hat{w}_g\)) is defined by,

$$\hat{w}_g = \arg\min(f(z)-w_g\cdot z')^2\quad\text{subject to}\quad |w_g|\lt K \\ =\arg\min_{w_g}\sum_{z,z'\in\mathcal{Z}}(f(z)-w_g\cdot z')+\lambda\|w_g\|_1$$Compared with ridge regression which only shrinks coefficients, lasso can set some coefficients to 0, retain the relatively important varaible and give an easily interpretable model.

References

- "Why Should I Trust You?": Explaining the Predictions of Any Classifier

- Local Interpretable Model-agnostic Explanations of Bayesian Predictive Models via Kullback-Leibler Projections

- A Modified Perturbed Sampling Method for Local Interpretable Model-agnostic Explanation