Policy Iteration

Value Functions

Value functions (of states/state-action pairs) are to estimate how good it is for the agent to be in a given state (/to perform a given action and state).

Consider \(p(r|s)\),

$$p(r|s)=\sum_a\sum_{s'}p(r,a,s'|s)=\sum_a\sum_{s'}p(r,s'|a,s)p(a|s)=\sum_a\pi(a|s)\sum_{s'}p(s',r|s,a)$$Expected reward of a given state,

$$\sum_r rp(r|s)=\sum_a\pi(a|s)\sum_{s'}\sum_r rp(s',r|s,a)$$Expected return when starting in s and following \(\pi\),

$$ \begin{align*} v_\pi(s)&=\mathbb{E}_\pi[G_t|S_t=s]=\mathbb{E}_\pi\big[\sum^\infty_{k=0}\gamma^kR_{t+k+1}|S_t=s\big] \\ &=\mathbb{E}_\pi\big[R_{t+1}+\gamma\sum^\infty_{k=0}\gamma^kR_{t+k+2}|S_t=s\big]\\ &=\sum_a\pi(a|s)\sum_{s'}\sum_rp(s',r|s,a)\bigg[r+\gamma\mathbb{E}_\pi\big[\sum^\infty_{k=0}\gamma_kR_{t+k+2}|S_{t+1}=s'\big]\bigg]\\ &=\sum_a\pi(a|s)\sum_{s',r}p(s',r|s,a)[r+\gamma v_\pi(s')] \end{align*} $$Interestingly, you can see the value of a state given a policy is the sum of the future state value. This represents the value of the current state is related to the future states, not the previous states. This is the Bellman equation for \(v_\pi\).

Similarly,

$$p(r|s,a)=\sum_{s'}p(s',r|s,a)$$ $$\sum_rp(r|s,a) = \sum_r\sum_{s'}p(s',r|s,a)$$The expected return starting from s, taking the action a, and following policy \(\pi\).

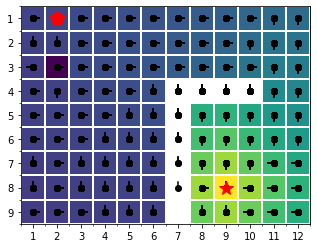

$$q_\pi(s,a)=\mathbb{E}_\pi[G_t|S_t=s,A_t=a]=\mathbb{E}_\pi\big[\sum^\infty_{k=0}\gamma^kR_{t+k+1}|S_t=s,A_t=a\big]\\ =\sum_{s',r}p(s',r|s,a)(r+\gamma q_\pi(s',a')) $$The backup diagram (existing in the book) shows the relationships that form the basis of the update, it transfer value information back to a state from its successor states.

Optimal Value Functions

A policy \(\pi\) is defined to be better than or equal to a policy \(\pi'\) for all states, i.e. \(\pi\geq\pi'\) if and only if \(v_\pi(s)\geq v_{\pi'}(s)\) for all \(s\in\mathfrak{S}\). This is an optimal policy. The optimal state-value function is defined as,

$$v_*(s) = \max_\pi v_\pi(s)$$The optimal action-value function is defined as,

$$q_*(s,a)=\max_\pi q_\pi(s,a)$$The Bellman optimality equation expresses the fact that the value of a state under an optimal policy must equal the expected return for the best action from that state,

$$v_*(s)=\max_{a\in\mathfrak{A}(s)}q_{\pi*}(s,a)=\max_a\mathbb{E}_{\pi*}[G_t|S_t=s,A_t=a]\\ =\max_{a\in\mathfrak{A}(s)}\sum_{s',r}p(s',r|s,a)[r+\gamma v_*(s')]\\ q_*(s,a) = \sum_{s',r}p(s',r|s,a)[r+\gamma\max_{a'}q_*(s',a')] $$This solution relies on at least 3 assumptions that are rarely true in practice. (1) we accurately know the dynamics of the environment. (2) we have enough computational resources to complete the computation of the solution. (3) the Markov property.

Dynamic Programming

Dynamic programming referes to a collection of algorithms that can be used to compute optimal policies given a perfect model of the environment as a MDP.

Steps

- Initialize values and policies arbitrarily.

- Update values and policies iteratively,

- Policy Evaluation

- Policy Improvement

Each iteration of iterative policy evaluation backs up the value of every state once to produce the new approximate function \(V_{k+1}\). It is a full backup since they are based on all possible next states rather than on a sample next state. If \(k\rightarrow\infty\), \(V_{k}\rightarrow V_\pi\).

$$ V_\pi(s)=\sum_a\pi(a|s)\sum_{s',r}p(s',r|s,a)(r+\gamma V_\pi(s')) \\ V_{k+1}(s)=\sum_a\pi(a|s)\sum_{s',r}p(s',r|s,a)(r+\gamma V_k(s')) $$ $$\max_s|V_{k+1}(s)-V_k(s)|<\theta$$The greedy policy takes the action that looks best in the short term -- after one step of lookahead -- according to \(v_\pi\).

$$ v_{\pi'}(s) = \max_a\sum_{s',r}p(s',r|s,a)[r+\gamma v_{\pi'}(s')]\\ \pi'(s) = \arg\max_a q_\pi(s,a)=\arg\max_a\sum_{s',r}p(s',r|s,a)(r+\gamma V_\pi(s')) $$Let \(\pi\) and \(\pi'\) be any pair of deterministic policies such that, for all \(s\in\mathfrak{S}\),

$$q_\pi(s,\pi'(s))\geq v_\pi(s)$$Strict inequality above holds for any state, the one below holds at least one state.

$$V_{\pi'}(s)\geq V_\pi(s)$$You can prove that \(q_\pi(s,\pi'(s))=v_{\pi'}(s)\).

For stochastic policy \(\pi=\pi(a|s)\),

$$q_\pi(s,\pi'(s)) = \sum_a\pi'(a|s)q_\pi(s,a)$$Policy Iteration

A sequence of monotonically improving policies and value functions,

$$\pi_0\xrightarrow{E}v_{\pi_0}\xrightarrow{I}\pi_1\xrightarrow{E}v_{\pi_1}\xrightarrow{I}\pi_2\xrightarrow{E}v_{\pi_2}\cdots v_*$$ where \(E\) is the policy evulation and \(I\) is the policy improvement.